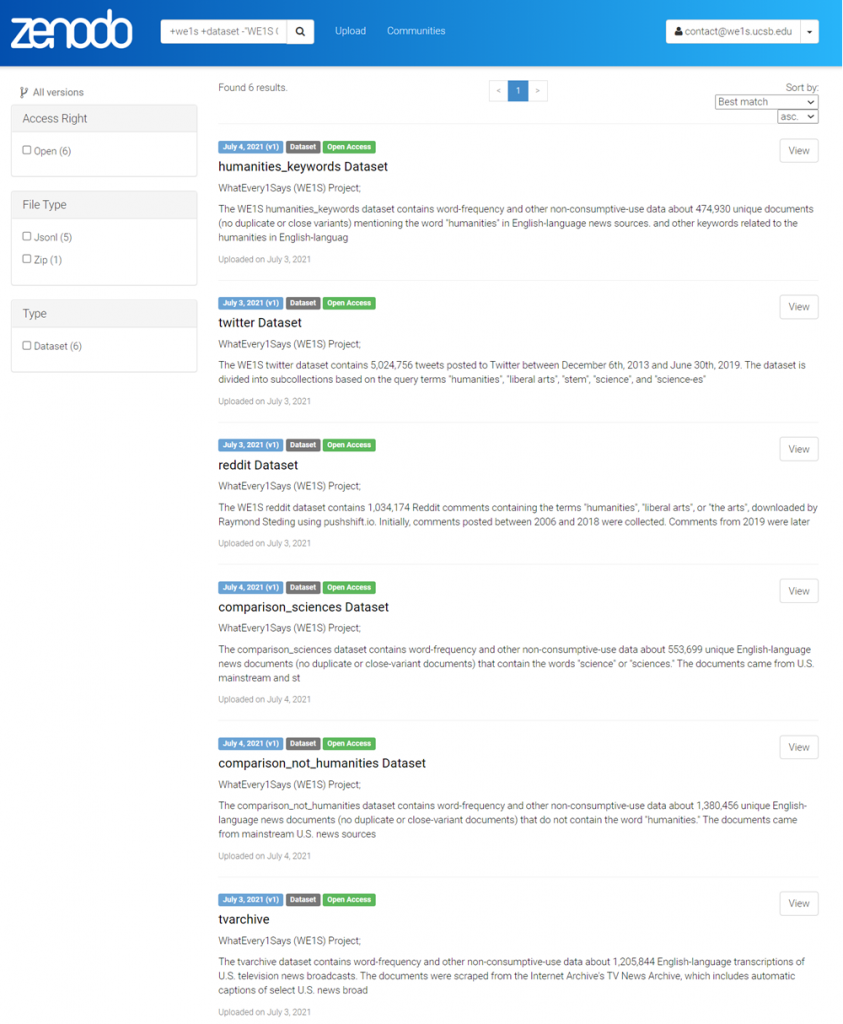

To let other researchers fully benefit from the data we harvested and processed from the materials we studied, and to promote “open science,” we deposited our full datasets in Zenodo, the repository operated by CERN. These six datasets hold all the data and metadata from which we extracted our “collections” for modeling. A dataset includes the raw data where that data is in the public domain. Otherwise, it includes only the extracted features we collected for non-consumptive use in our analyses. Together, the datasets represent all documents collected by the project. Our “collections,” by contrast, are subsets of these datasets filtered by keyword, source, or document type to help us address particular research questions. For instance, our Collection 1 (C-1) is a subset of our humanities_keywords dataset of 474,930 unique documents mentioning the word “humanities” (and phrases related to the humanities such as “liberal arts” or “the arts”). C-1 focuses on mainstream U.S. journalism, narrowing the set to 82,324 articles mentioning “humanities” from 850 U.S. news sources. Other collections focus on top-circulation newspapers, student newspapers, and so on, and draw their data from the same dataset or from different datasets.
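The dataset/collection relationship can be pictured as a filter over document records. The sketch below is illustrative only: the `Document` fields (`source`, `country`, `text`), the `make_collection` helper, and the sample records are hypothetical and are not the WE1S schema or tooling. For simplicity it matches the keyword against plain text, whereas the deposited datasets mostly hold extracted features rather than raw text.

```python
from dataclasses import dataclass


@dataclass
class Document:
    # Hypothetical metadata fields; the real WE1S schema may differ.
    doc_id: str
    source: str
    country: str
    text: str


def make_collection(dataset, keyword, countries=None, sources=None):
    """Return the subset of `dataset` that mentions `keyword` and,
    optionally, comes from the given countries or sources."""
    keyword = keyword.lower()
    subset = []
    for doc in dataset:
        if keyword not in doc.text.lower():
            continue
        if countries is not None and doc.country not in countries:
            continue
        if sources is not None and doc.source not in sources:
            continue
        subset.append(doc)
    return subset


dataset = [
    Document("a1", "Example Daily", "US", "Funding for the humanities rose."),
    Document("a2", "Example Times", "UK", "The humanities in Britain."),
    Document("a3", "Example Daily", "US", "Local sports results."),
]

# A C-1-like subset: keyword match restricted to U.S. sources.
c1_like = make_collection(dataset, "humanities", countries={"US"})
print([d.doc_id for d in c1_like])  # ['a1']
```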

Data sources

Our primary source for news articles was LexisNexis Academic (accessed via the LexisNexis “Web Services Kit” API), supplemented by other databases of news and other materials. Using our Chomp web-scraping tool, we also harvested born-digital news and additional texts directly from the web; and using our social-media collection tools, we gathered about 5 million posts from Twitter and 1 million posts from Reddit mentioning “humanities” and related terms.

Data selection and processing

All documents in our datasets were pre-processed into a common format, with extracted features stored in a “features” table. After feature extraction, we removed raw textual data not in the public domain and randomized the features table to prevent recovery of the original text. All original tokens were preserved, including punctuation and line breaks. Filtering for punctuation, stop words, or other features occurred only later, when we built our “collections” and when particular analyses required it.
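One plausible reading of “randomizing” the features table is shuffling its rows, so that token counts and per-token labels survive while word order (and thus the running text) is lost. The sketch below assumes that reading; the row fields and the helper names `features_table` and `randomize_rows` are hypothetical, and Python's `random.shuffle` stands in for whatever procedure WE1S actually used.

```python
import random


def features_table(tokens):
    """Build one row per token with a few illustrative (hypothetical) features."""
    return [
        {"token": tok, "lower": tok.lower(), "is_punct": not tok.isalnum()}
        for tok in tokens
    ]


def randomize_rows(rows, seed=None):
    """Return a shuffled copy so the original token order cannot be
    read back from the table."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    return shuffled


tokens = ["The", "humanities", "matter", "."]
table = randomize_rows(features_table(tokens), seed=42)
# Token counts survive the shuffle, but word order does not.
print(sorted(row["lower"] for row in table))  # ['.', 'humanities', 'matter', 'the']
```

Frequency-based analyses only need the multiset of rows, which is why this kind of shuffling is compatible with non-consumptive use.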

The following is an overview of the steps in our pre-processing algorithm:

- Texts were de-duplicated algorithmically (eliminating exact and close-variant copies of documents).

- Texts were normalized to Unicode.

- Accents were stripped.

- Text was tokenized and labeled using spaCy v2.2 with its default settings, except as noted below.

- A “features” table was created with one row for each token. Tokens were labeled with the following:

    - Part-of-speech tags (using Universal Dependencies labels)

    - Part-of-speech tags (using Penn Treebank labels)

    - Lower-cased forms of tokens

    - Lemmas (“humanities” was lemmatized as “humanities”, not “humanity”)

    - Whether or not the token is a stopword (according to the WE1S list of stopwords)

    - Named entities (except those categorized by spaCy as ‘CARDINAL’, ‘DATE’, ‘QUANTITY’, and ‘TIME’)

        - Dates that are names of months were nevertheless labeled as entities.

        - For phrasal entities (e.g., United States of America), each token was labeled as either the beginning of the entity or inside the entity.

- Flesch-Kincaid Readability, Flesch-Kincaid Reading Ease, and Dale-Chall readability scores were calculated.
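The first three steps can be approximated with the standard library. This is a minimal sketch under stated assumptions, not the WE1S pre-processor: the source does not name a Unicode normalization form or a de-duplication method, so NFKD normalization and word-trigram Jaccard similarity are assumed stand-ins. The 0.8 threshold and the helper names (`normalize`, `shingles`, `deduplicate`) are hypothetical.

```python
import unicodedata


def normalize(text):
    """Apply NFKD normalization, then drop combining marks (accents),
    e.g. 'café' -> 'cafe'."""
    decomposed = unicodedata.normalize("NFKD", text)
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))


def shingles(text, n=3):
    """Set of lower-cased word n-grams used to compare documents."""
    words = normalize(text).lower().split()
    return {tuple(words[i:i + n]) for i in range(max(len(words) - n + 1, 1))}


def jaccard(a, b):
    """Overlap of two shingle sets, from 0.0 (disjoint) to 1.0 (identical)."""
    return len(a & b) / len(a | b) if a | b else 1.0


def deduplicate(docs, threshold=0.8):
    """Keep the first of any group of exact or near-duplicate documents."""
    kept, kept_shingles = [], []
    for doc in docs:
        s = shingles(doc)
        if any(jaccard(s, k) >= threshold for k in kept_shingles):
            continue
        kept.append(doc)
        kept_shingles.append(s)
    return kept


docs = [
    "The humanities café reopened on Monday morning downtown",
    "The Humanities Cafe reopened on Monday morning downtown",
    "Science funding was cut again this year",
]
print(normalize("café"))       # cafe
print(len(deduplicate(docs)))  # 2
```

Pairwise comparison is quadratic in the number of documents; at the scale of hundreds of thousands of documents, a hashing scheme such as MinHash would be the usual replacement.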

humanities_keywords

The WE1S humanities_keywords dataset contains word-frequency and other non-consumptive-use data about 474,930 unique documents (no duplicates or close variants) mentioning the word “humanities” and other keywords related to the humanities in English-language news sources. Other keywords include “liberal arts,” “the arts,” “literature,” “history,” and “philosophy.” The documents came from 850 U.S. and 437 international news sources and their associated blogs (including student newspapers), published mostly during 1989-2019. The word “humanities” occurs XXX times in the dataset. WE1S and other researchers use this data to look for broad patterns and to help guide closer study. For example, WE1S uses a subset of this dataset (its Collection 1, which includes only U.S. news sources) to address many research questions about public discourse on the humanities in the U.S. and to compare with other subsets of this dataset.
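Counts like the total occurrences of “humanities” can be computed directly from a features table, because shuffling rows does not change how many rows carry a given form. The sketch below assumes hypothetical row fields (`doc_id`, `lower`, `lemma`) and invented sample rows; it does not reproduce the dataset's actual count.

```python
# Hypothetical rows from a (shuffled) features table: one row per token.
rows = [
    {"doc_id": "d1", "lower": "humanities", "lemma": "humanities"},
    {"doc_id": "d1", "lower": "the", "lemma": "the"},
    {"doc_id": "d2", "lower": "humanities", "lemma": "humanities"},
    {"doc_id": "d2", "lower": "humanities", "lemma": "humanities"},
    {"doc_id": "d3", "lower": "history", "lemma": "history"},
]


def term_frequency(rows, term):
    """Total occurrences of `term` across all documents."""
    return sum(1 for r in rows if r["lower"] == term)


def document_frequency(rows, term):
    """Number of distinct documents containing `term`."""
    return len({r["doc_id"] for r in rows if r["lower"] == term})


print(term_frequency(rows, "humanities"))      # 3
print(document_frequency(rows, "humanities"))  # 2
```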

comparison_not_humanities

The comparison_not_humanities dataset contains word-frequency and other non-consumptive-use data about 1,380,456 unique English-language news documents (no duplicate or close-variant documents) that do not contain the word “humanities.” The documents came from mainstream U.S. news sources published during 2000-2019. WE1S researchers use this data as context for better understanding the place in public discourse of documents that do mention the word “humanities” (such as those in WE1S’s humanities_keywords dataset). For example, we know that only a small fraction of newspaper articles contain the word “humanities,” but how small is that fraction?

WE1S gathered this data using keyword searches for 3 of the most common words in the English language (based on a well-known analysis of the Oxford English Corpus) that LexisNexis indexes and thus makes available for search: “person,” “say,” and “good.” We took data from the 15 top-circulation newspapers in the U.S. for 2000-2019, randomly selecting 1 month per year for each keyword to keep the results to manageable numbers (each year searched therefore includes data from 3 months of that year). We also took data from every other LexisNexis source from which we had gathered data for our humanities_keywords dataset. (We were not able to fully replicate our previous searches, however, so some sources have no comparison data.) For these sources, we focused on the years 2013-2019 and again randomly selected 1 month per year for each keyword to limit results. To exclude articles containing the word “humanities,” we searched each selected source for articles matching “person AND NOT humanities,” “say AND NOT humanities,” and “good AND NOT humanities.” These searches also matched plural and other inflected forms of each word, so documents in this dataset may contain the words “persons,” “people,” “says,” and “goods.”
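The sampling design can be written out as a search plan. This is a hypothetical sketch: the keywords, year ranges, and Boolean query pattern come from the description above, but `sampling_plan` is an invented helper that does not call LexisNexis. The text does not say how months were drawn; because it states that each year covers 3 months, the sketch assumes three distinct months per year.

```python
import random

# Keywords and query pattern from the description; the month-sampling
# code itself is a hypothetical illustration.
KEYWORDS = ["person", "say", "good"]


def sampling_plan(first_year, last_year, seed=None):
    """Return (year, keyword, month, query) tuples: for each year, three
    distinct random months, one assigned to each keyword."""
    rng = random.Random(seed)
    plan = []
    for year in range(first_year, last_year + 1):
        months = rng.sample(range(1, 13), len(KEYWORDS))
        for keyword, month in zip(KEYWORDS, months):
            query = f"{keyword} AND NOT humanities"
            plan.append((year, keyword, month, query))
    return plan


# Top-circulation newspapers: 2000-2019; other sources: 2013-2019.
top_papers = sampling_plan(2000, 2019, seed=1)
other_sources = sampling_plan(2013, 2019, seed=1)
print(len(top_papers), len(other_sources))  # 60 21
print(top_papers[0][3])  # person AND NOT humanities
```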

comparison_sciences

The comparison_sciences dataset contains word-frequency and other non-consumptive-use data about 553,699 unique English-language news documents (no duplicate or close-variant documents) that contain the words “science” or “sciences.” The documents came from U.S. mainstream and student news sources published during 1977-2019 (though mostly from 1985-2019). WE1S researchers use this data to understand how public discourse about the humanities compares to public discourse about science.

We gathered this data using keyword searches for “science,” which returned articles containing either or both of the words “science” and “sciences.” We took data from the 10 top-circulation newspapers in the U.S. and from University Wire sources (student newspapers). Documents in this dataset may also contain the word “humanities,” just as documents in the humanities_keywords dataset may contain the words “science” or “sciences.”
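The “either or both” matching behavior can be illustrated with a regular expression. LexisNexis performs its own matching server-side, so this is only a sketch of the intended semantics; the pattern and the `mentions_science` helper are hypothetical. Word boundaries keep related words such as “scientist” and “conscience” from matching.

```python
import re

# Matches "science" or "sciences" as whole words, case-insensitively.
SCIENCE = re.compile(r"\bsciences?\b", re.IGNORECASE)


def mentions_science(text):
    """True if the text contains "science" and/or "sciences"."""
    return SCIENCE.search(text) is not None


print(mentions_science("Computer Science majors"))    # True
print(mentions_science("The sciences and the arts"))  # True
print(mentions_science("A scientist spoke"))          # False
```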

twitter

The WE1S twitter dataset contains 5,024,756 tweets posted to Twitter between December 6, 2013, and June 30, 2019. The dataset is divided into subcollections based on the query terms “humanities”, “liberal arts”, “stem”, “science”, and “science(s)” (a query for the presence of either “science” or “sciences”). The number of tweets in each subcollection is as follows:

- humanities: 1,705,038

- liberal-arts: 7,663

- stem: 865,156

- science: 2,089,985

- science-es: 356,914

The tweets are distributed by year as follows:

- 2013: 16,335

- 2014: 862,746

- 2015: 1,711,823

- 2016: 947,561

- 2017: 976,971

- 2018: 324,133

- 2019: 185,187
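Both breakdowns should sum to the stated total of 5,024,756 tweets, which offers a quick consistency check. Here the 2018 count is read as 324,133, the value the stated total implies once the other years are subtracted. The variable names are illustrative.

```python
subcollections = {
    "humanities": 1_705_038,
    "liberal-arts": 7_663,
    "stem": 865_156,
    "science": 2_089_985,
    "science-es": 356_914,
}
by_year = {
    2013: 16_335, 2014: 862_746, 2015: 1_711_823, 2016: 947_561,
    2017: 976_971, 2018: 324_133, 2019: 185_187,
}
TOTAL = 5_024_756

# Each breakdown accounts for every tweet in the dataset.
assert sum(subcollections.values()) == TOTAL
assert sum(by_year.values()) == TOTAL

# The 2018 figure implied by the total and the other years:
print(TOTAL - sum(v for y, v in by_year.items() if y != 2018))  # 324133
```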

Collectively, the tweets represent the work of 1,886,739 distinct usernames. We recorded each tweet’s mentions, hashtags, and links, as well as its numbers of likes and retweets. Unlike most other WE1S datasets, our twitter dataset does not contain extracted features. Instead, it contains the original text of each tweet along with a “tidy tweet,” which represents the tweet after preprocessing. We preprocessed tweets using a modified form of our preprocessing algorithm; details can be found in the WE1S TweetSuite repository.
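As a rough illustration of recording mentions, hashtags, and links alongside a simplified text, the sketch below uses regular expressions. It is hypothetical: the actual TweetSuite steps are documented in that repository and are not reproduced here. The patterns, the `parse_tweet` helper, and the choice to keep hashtag words while dropping the `#` symbol are all assumptions.

```python
import re

# Approximate patterns; real tweet tokenization has more edge cases.
MENTION = re.compile(r"@\w+")
HASHTAG = re.compile(r"#\w+")
URL = re.compile(r"https?://\S+")


def parse_tweet(text):
    """Record mentions, hashtags and links, and build a simplified
    'tidy' text with links and mentions removed."""
    record = {
        "mentions": MENTION.findall(text),
        "hashtags": HASHTAG.findall(text),
        "links": URL.findall(text),
    }
    tidy = URL.sub(" ", text)
    tidy = MENTION.sub(" ", tidy)
    tidy = tidy.replace("#", "")  # keep hashtag words, drop the symbol
    record["tidy"] = " ".join(tidy.lower().split())  # collapse whitespace
    return record


tweet = "@we1s Why study the #humanities? https://example.org/post"
print(parse_tweet(tweet)["tidy"])  # why study the humanities?
```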

reddit

The WE1S reddit dataset contains 1,034,174 Reddit comments containing the terms “humanities”, “liberal arts”, or “the arts”, downloaded by Raymond Steding using pushshift.io. Initially, comments posted between 2006 and 2018 were collected. Comments from 2019 were later added.
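The selection criteria (search terms plus a date range that was later extended to 2019) can be sketched as a filter over comment records. This assumes Pushshift-style comment objects with a `body` text field and a `created_utc` Unix timestamp; the sample comments and the `select_comments` helper are hypothetical, and the sketch does not reproduce how the comments were actually downloaded.

```python
import re
from datetime import datetime, timezone

# Search terms from the description above, matched case-insensitively.
TERMS = re.compile(r"\b(?:humanities|liberal arts|the arts)\b", re.IGNORECASE)


def comment_year(comment):
    """Calendar year (UTC) of a comment's Unix timestamp."""
    return datetime.fromtimestamp(int(comment["created_utc"]), tz=timezone.utc).year


def select_comments(comments, first_year, last_year):
    """Comments that mention a search term and fall within the year range."""
    return [
        c for c in comments
        if TERMS.search(c["body"]) and first_year <= comment_year(c) <= last_year
    ]


comments = [
    {"id": "c1", "body": "Is a liberal arts degree worth it?", "created_utc": 1230768000},  # 2009-01-01
    {"id": "c2", "body": "Great game last night", "created_utc": 1230768000},
    {"id": "c3", "body": "Humanities majors unite", "created_utc": 1546300800},  # 2019-01-01
]
initial = select_comments(comments, 2006, 2018)   # original collection window
extended = select_comments(comments, 2006, 2019)  # with 2019 added later
print([c["id"] for c in initial])   # ['c1']
print([c["id"] for c in extended])  # ['c1', 'c3']
```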

These data were processed using the WhatEvery1Says preprocessor. In addition to the metadata downloaded from Reddit, the dataset records sentiment scores generated with TextBlob.

A description of the process at an early stage in the production of this dataset can be found in Steding’s blog post “A Digital Humanities Study of Reddit Student Discourse about the Humanities”.

tvarchive

The tvarchive dataset contains word-frequency and other non-consumptive-use data about 1,205,844 English-language transcriptions of U.S. television news broadcasts. The documents were scraped from the Internet Archive’s TV News Archive, which includes automatic captions of select U.S. news broadcasts since 2009. The full TV News Archive includes transcripts from 33 networks and hundreds of shows; although it contains over 2.2 million transcripts, WE1S researchers were able to collect only about 1.2 million documents containing complete transcripts. Unlike other WE1S datasets, the tvarchive dataset was not collected using keyword searches for specific terms (such as the word “humanities”).