We make our datasets, collections, topic models, and visualizations available for download so that others can reproduce our research or use these materials for their own exploration. However, we do not make the underlying texts available, except for those we harvested directly from public and open sources.

For a quick start on our datasets, see the descriptions on our Datasets page. For a quick start on our collections with their associated topic models and visualizations, see the summary cards on our Collections page.

Datasets

The WE1S corpus (body of materials) consists of over 1 million (1,028,629) unique English-language journalistic media articles and related documents mentioning the literal word “humanities” (and for some research purposes also the “liberal arts,” “the arts,” and “sciences”) from 1,053 U.S. and 437 international news and other sources from the 1980s to 2019, mostly after 2000 when news media began producing digital texts en masse. For comparison and other uses, we also gathered about 1.38 million unique documents representing a random sampling of all news articles. In addition, we harvested over 6 million posts mentioning the “humanities” and related terms from social media (about 5 million from Twitter and 1 million from Reddit); and about 1.2 million transcripts of U.S. television news broadcasts from those available in the Internet Archive.

Our primary source for news articles is LexisNexis Academic (via the LexisNexis “Web Services Kit” API), supplemented by other databases of news and other materials. We also directly harvested born-digital news and additional texts from the web, and posts from social media.

So that other researchers can fully benefit from the data we derived from these materials, we deposited our full datasets (six in total) in Zenodo, the repository operated by CERN to promote “open science.” These deposits do not include readable original texts, except for those we directly harvested from open and public sources. (See our Datasets page for more information and access to the Zenodo deposits.)

Collections

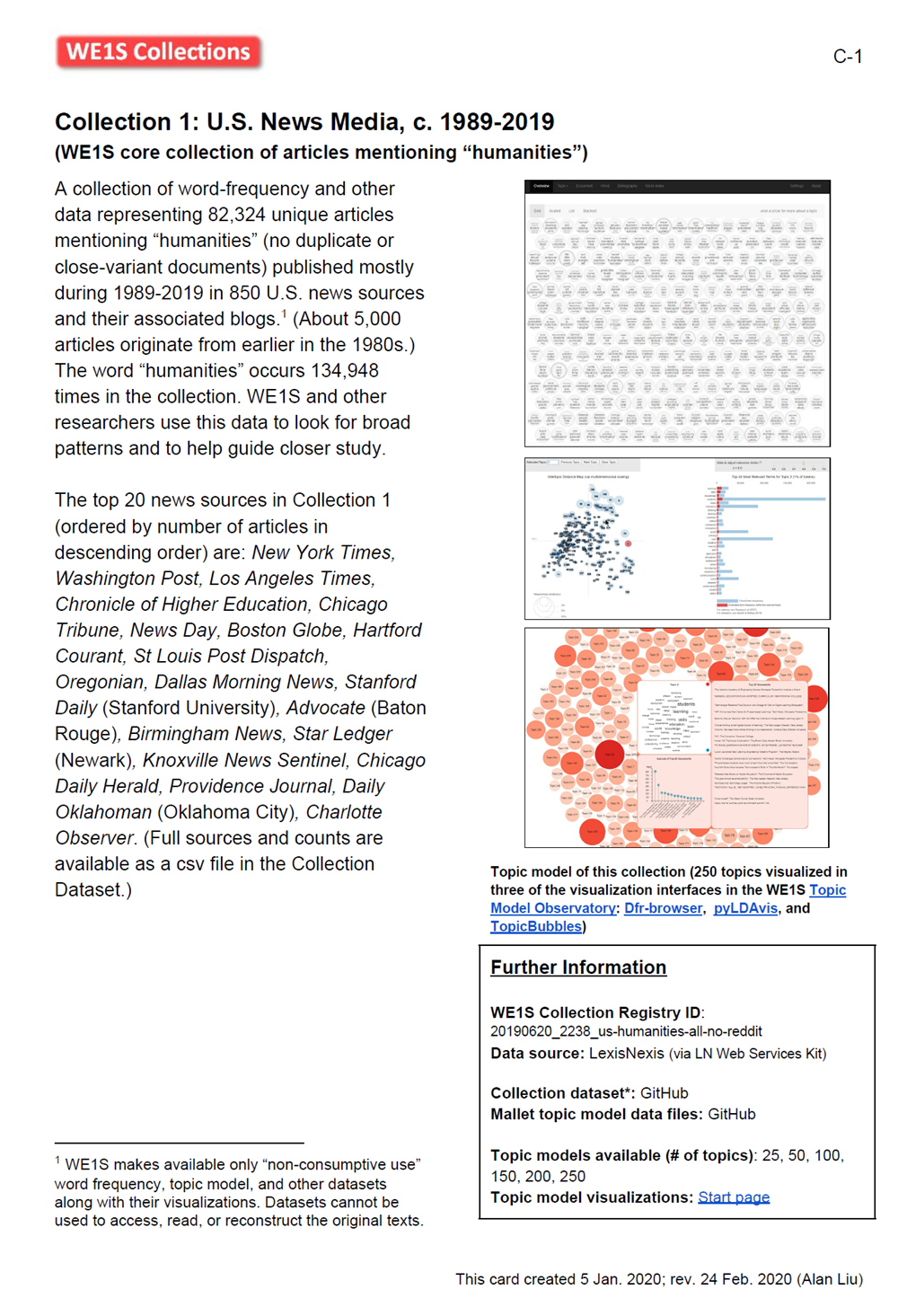

Our datasets represent all documents collected by our project. By contrast, our “collections” are subsets of these datasets filtered by keyword, source, or document type to help us address particular research questions. For instance, our Collection 1 (C-1) is drawn from our humanities_keywords dataset, which contains 474,930 unique documents mentioning the word “humanities” (and phrases related to the humanities such as “liberal arts” or “the arts”). C-1 focuses on mainstream U.S. journalism, reducing the set to 82,324 articles mentioning “humanities” from 850 U.S. news sources. Other “collections” focus on top-circulation newspapers, student newspapers, and so on, with data drawn from the same dataset or from different datasets. (See the metadata tags we also add to our data to help analyze groups of publication sources.)
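To make the relationship between a dataset and a collection concrete, here is a minimal sketch in Python of filtering by keyword, country, and document type. It is an illustration only and not our actual workflow: the `Document` fields, the `build_collection` function, and the sample records are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    # Hypothetical record layout, assumed for this illustration only.
    doc_id: str
    source: str
    country: str
    doc_type: str
    text: str


def build_collection(documents, keywords, countries=None, doc_types=None):
    """Return documents that mention any keyword, optionally limited
    to the given countries and document types."""
    lowered = [k.lower() for k in keywords]
    selected = []
    for doc in documents:
        body = doc.text.lower()
        # Keyword filter: simple case-insensitive substring match.
        if not any(k in body for k in lowered):
            continue
        # Source filter, approximated here by country of publication.
        if countries is not None and doc.country not in countries:
            continue
        # Document-type filter.
        if doc_types is not None and doc.doc_type not in doc_types:
            continue
        selected.append(doc)
    return selected


# Invented sample records standing in for a dataset.
sample = [
    Document("a1", "Example Daily", "US", "news", "Funding for the humanities rose."),
    Document("a2", "Example Campus Paper", "US", "student", "Humanities majors respond."),
    Document("a3", "Example Times", "UK", "news", "The humanities and the sciences."),
    Document("a4", "Example Daily", "US", "news", "Local sports results."),
]

collection = build_collection(
    sample, ["humanities"], countries={"US"}, doc_types={"news"}
)
print([d.doc_id for d in collection])
```

Changing the `doc_types` or `countries` arguments would yield a different subset of the same dataset, which is roughly how one dataset can feed several collections.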

We make available nineteen of our collections for others to use and explore. (See the “cards” describing these on our Collections page.)

Topic Models

Each of our “collections” has a “Start Page” (example) that provides access to topic models of the texts in the collection. We created these models with the MALLET tool in a workflow conducted in our WE1S Workspace of Jupyter notebooks. (See our cards explaining “Topic Modeling” and “WE1S Workspace.”) We generated several models for each collection, each with a different number of topics (typically 25, 50, 100, 150, 200, and 250). We make these models available as interactive visualizations on our website (see below) and also as downloadable MALLET data and other files that other researchers can use.
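As a rough illustration of modeling one collection at several granularities, the sketch below builds one MALLET `train-topics` command per topic count. It only constructs and prints the commands and does not run them. The input file name, the output file names, and the `mallet_commands` helper are assumptions made for this example; they do not describe the WE1S Workspace itself.

```python
# Topic counts named in the text above.
TOPIC_COUNTS = [25, 50, 100, 150, 200, 250]


def mallet_commands(input_file, topic_counts=TOPIC_COUNTS, mallet_bin="mallet"):
    """Build one MALLET train-topics command (as an argument list)
    for each requested number of topics."""
    commands = []
    for k in topic_counts:
        commands.append([
            mallet_bin, "train-topics",
            "--input", input_file,
            "--num-topics", str(k),
            # Output file names below are hypothetical.
            "--output-state", f"topic-state-{k}.gz",
            "--output-topic-keys", f"topic-keys-{k}.txt",
            "--output-doc-topics", f"doc-topics-{k}.txt",
        ])
    return commands


# "collection.mallet" is a placeholder for an imported MALLET corpus file.
for cmd in mallet_commands("collection.mallet"):
    print(" ".join(cmd))
```

Each printed line could then be run from a shell or passed to `subprocess.run`, assuming MALLET is installed and the corpus has already been imported into MALLET's format.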

Visualizations

Our topic models come with a suite of interactive visualizations allowing users to explore their topics and associated words and texts (though due to copyright restrictions the links to texts show only word frequency counts and other data instead of readable articles). We call this suite of visualizations, some original and others adapted from existing topic-model interfaces, our Topic Model Observatory.