This page serves as a staging ground for creating the WE1S topic modeling interpretation protocol. It includes observations about using the DFR-browser interface, steps for reading a model, and supplementary technical aids for reading models.

- Weighting Topics (using spreadsheet)

- Visualizing topics as word clouds (“topic clouds”), using Lexos

- How to Interpret Topic Models

Weighting Topics (using spreadsheet)

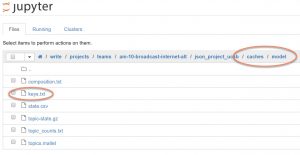

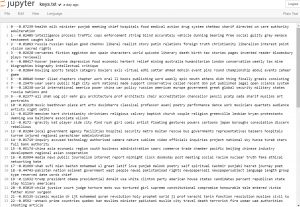

A. Go to your project’s cache/model folder on Mirrormask (in the “write” tree), and download the “keys.txt” file to your local hard drive (or copy the content of the file to your clipboard).

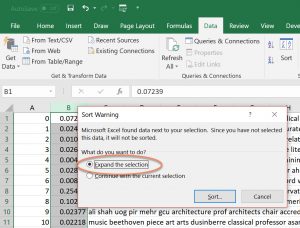

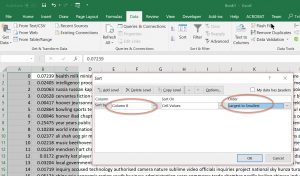

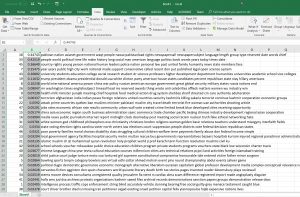

B. Copy and paste the content of keys.txt into an Excel spreadsheet (or Google Sheet). Then select column B, which holds each topic's weight, go to the “Data” tab, and use “Sort”. (In Excel, you will then have to “expand” the selection so that the affiliated columns are sorted along with column B, and also choose the column to sort on and the sort order.)

C. The result is a ranked list of topics. If you wish, you can also color-code the rows to mark different kinds of topics.
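For those who prefer a script to a spreadsheet, the same ranking can be sketched in Python. This is a minimal sketch, not part of the WE1S workflow: it assumes keys.txt follows the usual MALLET topic-keys layout (tab-separated topic number, weight, and top words, matching columns A, B, and C in the spreadsheet), and the function name `rank_topics` and the sample rows are hypothetical.

```python
def rank_topics(keys_text):
    """Return (topic_id, weight, words) rows sorted by weight, highest first.

    Assumes each line is: topic number <TAB> weight <TAB> top words.
    """
    rows = []
    for line in keys_text.splitlines():
        parts = line.split("\t", 2)
        if len(parts) < 3:
            continue  # skip blank or malformed lines
        topic_id, weight, words = parts
        rows.append((int(topic_id), float(weight), words.strip()))
    # Equivalent to sorting the spreadsheet on column B, largest to smallest.
    return sorted(rows, key=lambda row: row[1], reverse=True)


# Made-up sample rows standing in for the content of keys.txt.
sample_keys = (
    "0\t0.05\tmoney bank loan\n"
    "1\t0.21\thumanities college students\n"
    "2\t0.11\tart museum gallery\n"
)

for topic_id, weight, words in rank_topics(sample_keys):
    print(f"{topic_id}\t{weight:.5f}\t{words}")
```

To use it on a real model, read the downloaded file with `open("keys.txt", encoding="utf-8").read()` and pass the result to `rank_topics`.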

Visualizing topics as word clouds (“topic clouds”), using Lexos

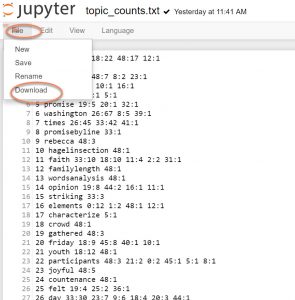

A. Go to your project’s cache/model folder on Mirrormask (in the “write” tree), and download the “counts.txt” file to your local hard drive.

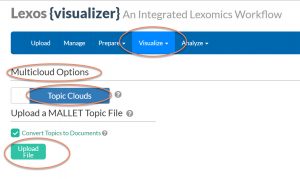

B. Go to the Lexos online site: http://lexos.wheatoncollege.edu. Then, under the “Visualize” tab, choose “Multicloud.” In the dialogue for the multicloud, toggle from “Document clouds” to “Topic Clouds.” Then upload your “counts.txt” file and ask Lexos to “get graphs”.
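Before uploading, it can help to check what counts.txt contains. The sketch below is an illustration under stated assumptions, not a description of how Lexos works internally: it assumes counts.txt is in the usual MALLET word-topic-counts layout (one word per line as `index word topic:count topic:count ...`), and the function names and sample lines are hypothetical. It gathers the word counts for each topic, which is the information a topic cloud displays.

```python
from collections import defaultdict


def topic_word_counts(counts_text):
    """Map each topic number to a {word: count} dictionary.

    Assumes each line is: index word topic:count [topic:count ...].
    """
    topics = defaultdict(dict)
    for line in counts_text.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue  # skip blank lines and words with no topic counts
        word = fields[1]
        for pair in fields[2:]:
            topic, _, count = pair.partition(":")
            topics[int(topic)][word] = int(count)
    return topics


def top_words(topics, topic_id, n=10):
    """Return the n most frequent (word, count) pairs for one topic."""
    counts = topics.get(topic_id, {})
    return sorted(counts.items(), key=lambda kv: kv[1], reverse=True)[:n]


# Made-up sample lines standing in for the content of counts.txt.
sample_counts = (
    "0 humanities 1:40 0:3\n"
    "1 bank 0:25\n"
    "2 college 1:18\n"
    "3 loan 0:9 1:1\n"
)

topics = topic_word_counts(sample_counts)
print(top_words(topics, 1, n=2))
```

The words that dominate a topic's list here should be the largest words in that topic's cloud.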

How to Interpret Topic Models

Resources

- Boyer, Ryan Culp. “A Human-in-the-Loop Methodology For Applying Topic Models to Identify Systems Thinking and to Perform Systems Analysis.” Master's thesis. University of Virginia, 2016. https://libra2.lib.virginia.edu/downloads/r207tp34z?filename=Boyer_Thesis_Dec2016.pdf.

- Chang, Jonathan, Sean Gerrish, Chong Wang, Jordan L. Boyd-Graber, and David M. Blei. “Reading Tea Leaves: How Humans Interpret Topic Models.” In Advances in Neural Information Processing Systems 22, edited by Y. Bengio, D. Schuurmans, J. D. Lafferty, C. K. I. Williams, and A. Culotta, 288–296. Curran Associates, Inc., 2009. http://papers.nips.cc/paper/3700-reading-tea-leaves-how-humans-interpret-topic-models.pdf.

- Chuang, Jason, Sonal Gupta, Christopher D. Manning, and Jeffrey Heer. “Topic Model Diagnostics: Assessing Domain Relevance via Topical Alignment.” International Conference on Machine Learning (ICML), 2013. http://vis.stanford.edu/papers/topic-model-diagnostics.

- Evans, Michael S. “A Computational Approach to Qualitative Analysis in Large Textual Datasets.” PLOS ONE 9, no. 2 (February 3, 2014): e87908. https://doi.org/10.1371/journal.pone.0087908.

- Excerpt (“Evaluating Topic Significance”): “In addition to identifying qualitatively distinct topics in a large textual dataset, computational methods provide the necessary information to evaluate the significance of topics that the model identifies. From a topic modeling perspective, there are two basic ways to think about the significance of a topic. The first is to consider how commonly a topic occurs in the corpus as a whole, relative to other topics. If a reader encounters demarcation language, what subjects are they more likely to be reading about? Figure 4 reports labeled topics ranked by the Dirichlet parameter for each topic estimated by MALLET. In the MALLET implementation of LDA with hyperparameter optimization enabled, the Dirichlet parameter for each topic is optimized at regular intervals as the model is iteratively constructed. The greater the Dirichlet parameter for each topic in the resulting model, the greater the proportion of the corpus that has been assigned to that topic by MALLET. Ranking topics by Dirichlet parameter therefore answers the question of how commonly a topic occurs in the corpus as a whole, relative to other topics.”

- Posner, Miriam. “Very Basic Strategies for Interpreting Results from the Topic Modeling Tool.” Miriam Posner’s Blog (blog), October 29, 2012. http://miriamposner.com/blog/very-basic-strategies-for-interpreting-results-from-the-topic-modeling-tool/.

- Veas, Eduardo, and Cecilia di Sciascio. “Interactive Topic Analysis with Visual Analytics and Recommender Systems.” Association for the Advancement of Artificial Intelligence, 2015. https://www.researchgate.net/publication/279285547_Interactive_Topic_Analysis_with_Visual_Analytics_and_Recommender_Systems.

- Abstract: “The ability to analyze and organize large collections, to draw relations between pieces of evidence, to build knowledge, are all part of an information discovery process. This paper describes an approach to interactive topic analysis, as an information discovery conversation with a recommender system. We describe a model that motivates our approach, and an evaluation comparing interactive topic analysis with state-of-the-art topic analysis methods.”